New GStreamer ElevenLabs speech synthesis plugin

From Centricular Devlog by Mathieu Duponchelle (Centricular)

Back in June '25, I implemented a new speech synthesis element using the ElevenLabs API.

In this post I will briefly explain some of the design choices I made, and provide one or two usage examples.

POST vs. WSS

ElevenLabs offers two interfaces for speech synthesis:

Either open a websocket and feed the service small chunks of text (e.g. words) to receive a continuous audio stream

Or POST longer segments of text to receive independent audio fragments

The websocket API is well-adapted to conversational use cases, and can offer the lowest latency, but isn't the most well-suited to the use cases I was targeting: my goal was to use it to synthesize audio from text that was first transcribed, then translated from an original input audio stream.

In this situation we have two constraints we need to be mindful of:

For translation purposes we need to construct large enough text segments prior to translating, in order for the translation service to operate with enough context to do a good job.

Once audio has been synthesized, we might also need to resample it in order to have it fit within the original duration of the speech.

Given that:

The latency benefits from using the websocket API are largely negated by the larger text segments we would use as the input

Resampling the continuous stream we would receive to make sure individual words are time-shifted back to the "correct" position, while possible thanks to the sync_alignment option, would have increased the complexity of the resulting element

I chose to use the POST API for this element. We might still choose to implement a websocket-based version if there is a good story for using GStreamer in a conversational pipeline, but that is not on my radar for now.

Additionally, we already have a speech synthesis element around the AWS Polly API which is also POST-based, so both elements can share a similar design.
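As a rough illustration of what "POST a longer segment of text" looks like, here is a minimal Python sketch that builds such a request without sending it. This is not the element's code (the element itself is not written in Python). The endpoint path, the xi-api-key header and the JSON field names are assumptions based on ElevenLabs' public REST API and should be checked against the current API reference; build_tts_request is a hypothetical helper name.

```python
import json
import urllib.request

# Assumption: endpoint and header names follow ElevenLabs' public REST API;
# verify them against the current API reference before relying on this.
API_BASE = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(api_key, voice_id, text, model_id="eleven_multilingual_v2"):
    """Build (but do not send) a POST request for one independent text segment."""
    body = json.dumps({"text": text, "model_id": model_id}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/{voice_id}",
        data=body,
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_tts_request("XXX", "iCKVfVbyCo5AAswzTkkX", "Hello from GStreamer.")
    print(req.get_method(), req.full_url)
    # Sending is left out on purpose: urllib.request.urlopen(req).read()
    # would fetch the encoded audio for this one segment.
```

Each request is independent of the previous one, which is what makes it straightforward to post-process every returned fragment on its own.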

Audio resampling

As mentioned previously, the ElevenLabs API does not offer direct control over the duration of the output audio.

For instance, you might be dubbing speech from a fast speaker with a slow voice, potentially causing the output audio to drift out of sync.

To address this, the element can optionally make use of signalsmith_stretch to resample the audio in a pitch-preserving manner.

When the feature is enabled it can be used through the overflow=compress property.

The effect can sometimes be pretty jarring for very short input, so an extra property is also exposed to allow some tolerance for drift: max-overflow. It represents the maximum duration by which the audio output is allowed to drift out of sync, and does a good job using up intervals of silence between utterances.
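To make the interaction between overflow=compress and max-overflow more concrete, here is a small Python sketch of the arithmetic involved. It is only one plausible reading of the description above, not the element's actual logic: plan_overflow and its parameters are hypothetical names, and durations are plain nanosecond integers.

```python
def plan_overflow(synth_ns, slot_ns, max_overflow_ns=0):
    """Return (target_ns, ratio) for fitting synthesized audio into its slot.

    synth_ns:        duration of the synthesized audio
    slot_ns:         duration of the original speech it replaces
    max_overflow_ns: tolerated drift past the end of the slot

    Assumption: audio is only ever shortened, never lengthened.
    """
    if synth_ns <= 0:
        raise ValueError("synthesized duration must be positive")
    allowed_ns = slot_ns + max_overflow_ns
    target_ns = min(synth_ns, allowed_ns)
    # ratio < 1.0 means the audio must be time-compressed to that fraction
    # of its length; 1.0 means it can be played back untouched.
    return target_ns, target_ns / synth_ns


if __name__ == "__main__":
    second = 1_000_000_000
    # 4 s of synthesized speech for a 3 s slot, no tolerance for drift
    print(plan_overflow(4 * second, 3 * second))
    # Same audio, but allowed to spill 0.5 s into the following silence
    print(plan_overflow(4 * second, 3 * second, second // 2))
```

With a non-zero tolerance, less compression is needed, which is why short utterances followed by silence tend to sound more natural.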

Voice cloning

The ElevenLabs API exposes a pretty powerful feature, Instant Voice Cloning. It can be used to create a custom voice that will sound very much like a reference voice, requiring only a handful of seconds to a few minutes of reference audio data to produce useful results.

Using the multilingual model, that newly-cloned voice can even be used to generate convincing speech in a different language.

A typical pipeline for my target use case can be represented as (pseudo gst-launch):

input_audio_src ! transcriber ! translator ! synthesizer

When using a transcriber element such as speechmaticstranscriber, speaker "diarization" (fancy word for detection) can be used to determine when a given speaker was speaking, thus making it possible to clone voices even in a multi-speaker situation.

The challenge in this situation however is that the synthesizer element doesn't have access to the original audio samples, as it only deals with text as the input.

I thus decided on the following solution:

input_audio_src ! voicecloner ! transcriber ! .. ! synthesizer

The voice cloner element will accumulate audio samples, then upon receiving custom upstream events from the transcriber element with information about speaker timings it will start cloning voices and trim its internal sample queue.

To be compatible, a transcriber simply needs to send the appropriate events upstream. The speechmaticstranscriber element can be used as a reference.

Finally, once a voice clone is ready, the cloner element sends another event downstream with a mapping of speaker id to voice id. The synthesizer element can then intercept the event and start using the newly-created voice clone.
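The bookkeeping described above (accumulate audio, attribute it to speakers when timing events arrive, trim the queue, then publish a speaker-to-voice mapping) can be sketched in a few lines of Python. This is purely illustrative: CloneQueue, its methods and the chunk-level attribution rule are all hypothetical, and the real element works with GStreamer buffers and custom events rather than Python objects.

```python
from collections import deque


class CloneQueue:
    """Hypothetical bookkeeping for a voice-cloner-like element."""

    def __init__(self, min_reference_ns):
        self.chunks = deque()      # queued (start_ns, end_ns, payload) tuples
        self.reference = {}        # speaker id -> list of payloads
        self.reference_ns = {}     # speaker id -> reference duration so far
        self.min_reference_ns = min_reference_ns

    def push_audio(self, start_ns, end_ns, payload):
        """Accumulate an incoming audio chunk (chunks arrive in time order)."""
        self.chunks.append((start_ns, end_ns, payload))

    def on_speaker_timing(self, speaker, start_ns, end_ns):
        """Handle a 'speaker spoke during [start_ns, end_ns)' event.

        Chunks starting inside the interval are attributed to the speaker;
        everything starting before end_ns is then dropped from the queue.
        Returns True once enough reference audio exists to start cloning.
        """
        while self.chunks and self.chunks[0][0] < end_ns:
            c_start, c_end, payload = self.chunks.popleft()
            if c_start >= start_ns:
                self.reference.setdefault(speaker, []).append(payload)
                self.reference_ns[speaker] = (
                    self.reference_ns.get(speaker, 0) + (c_end - c_start)
                )
        return self.reference_ns.get(speaker, 0) >= self.min_reference_ns

    @staticmethod
    def register_clone(speaker, voice_id):
        """Build the speaker id -> voice id mapping to send downstream."""
        return {speaker: voice_id}


if __name__ == "__main__":
    queue = CloneQueue(min_reference_ns=2_000)
    for i, name in enumerate("abcd"):
        queue.push_audio(i * 1_000, (i + 1) * 1_000, name)
    print(queue.on_speaker_timing("S1", 0, 2_000))
    print(queue.register_clone("S1", "voice-abc"))
```

Attributing whole chunks keeps the sketch short; a real implementation would more likely slice samples at the exact speaker boundaries.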

The cloner element can also be used in single-speaker mode by just setting the speaker property to some identifier and watching for messages on the bus:

gst-launch-1.0 -m -e alsasrc ! audioconvert ! audioresample ! queue ! elevenlabsvoicecloner api-key=$ELEVENLABS_API_KEY speaker="Mathieu" ! fakesink

Putting it all together

At this year's GStreamer conference I gave a talk where I demo'd these new elements.

This is the pipeline I used then:

AWS_ACCESS_KEY_ID="XXX" AWS_SECRET_ACCESS_KEY="XXX" gst-launch-1.0 uridecodebin uri=file:///home/meh/Videos/spanish-convo-trimmed.webm name=ud \
ud. ! queue max-size-time=15000000000 max-size-bytes=0 max-size-buffers=0 ! clocksync ! autovideosink \
ud. ! audioconvert ! audioresample ! clocksync ! elevenlabsvoicecloner api-key=XXX ! \
speechmaticstranscriber url=wss://eu2.rt.speechmatics.com/v2 enable-late-punctuation-hack=false join-punctuation=false api-key="XXX" max-delay=2500 latency=4000 language-code=es diarization=speaker ! \
queue max-size-time=15000000000 max-size-bytes=0 max-size-buffers=0 ! textaccumulate latency=3000 drain-on-final-transcripts=false extend-duration=true ! \
awstranslate latency=1000 input-language-code="es-ES" output-language-code="en-EN" ! \
elevenlabssynthesizer api-key=XXX retry-with-speed=false overflow=compress latency=3000 language-code="en" voice-id="iCKVfVbyCo5AAswzTkkX" model-id="eleven_multilingual_v2" max-overflow=0 ! \
queue max-size-time=15000000000 max-size-bytes=0 max-size-buffers=0 ! audiomixer name=m ! autoaudiosink \
audiotestsrc volume=0.03 wave=violet-noise ! clocksync ! m.

Watch my talk for the result, or try it yourself (you will need API keys for speechmatics / AWS / elevenlabs)!

From GStreamer News by

From GStreamer News by  From Christian F.K. Schaller by

From Christian F.K. Schaller by

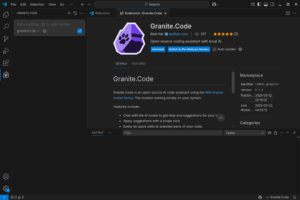

Dialog that gives detailed instructions for how to add G Code

Dialog that gives detailed instructions for how to add G Code

︎

︎